Cyberattacks are growing at an alarming rate and significantly impacting companies across all industries. As the automotive industry makes huge strides toward a fully autonomous future, the security issues facing driverless cars are becoming even more complex and critical.

The obvious risks, such as a hacked autonomous car crashing or braking abruptly, are just the tip of the iceberg. Driverless cars can hold massive amounts of user data, making them a lucrative target for cybercriminals. In addition, a compromised vehicle could create safety issues for drivers and other road users.

Take, for instance, the driverless Tesla in Texas that crashed and burned for four hours, killing two passengers, and the autonomous Uber car that killed a pedestrian in Arizona. Incidents like these badly damage the reputation of autonomous cars.

Fortunately, these were accidents, not attacks. One can only imagine the horrific outcomes if cybercriminals manage to compromise these systems.

All these factors raise doubts about the security of the AI systems in autonomous vehicles. However, one ought not to jump to conclusions without a holistic understanding. So, let’s take a closer look at the AI-driven systems in driverless cars that are most vulnerable to cyberattacks.

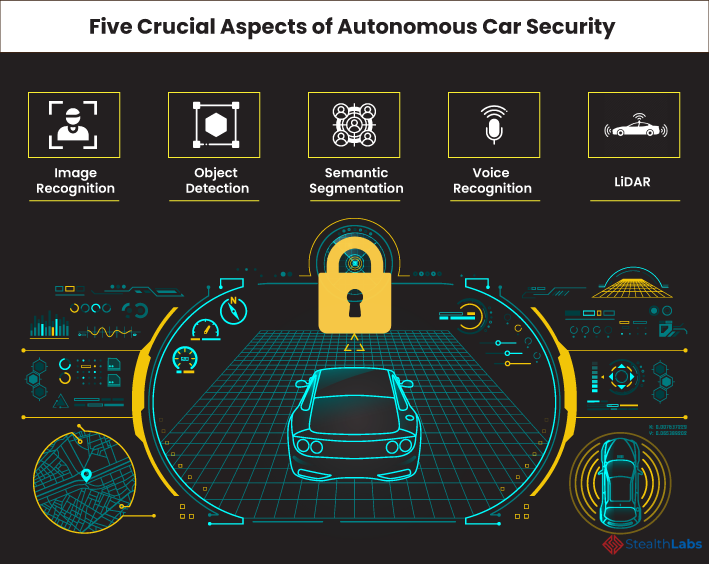

Five Crucial Aspects of Driverless Car Security

1) Image Recognition

Image recognition systems use deep learning algorithms to identify and classify images such as road signs. A group of researchers demonstrated how these systems can be deceived with carefully designed stickers and graffiti: the altered images still belonged to one category, yet the AI systems classified them as belonging to another.
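
To make the idea concrete, here is a minimal sketch of a gradient-sign (FGSM-style) perturbation against a toy linear classifier. The three-“pixel” image, the weights, the bias, and the epsilon budget are all hypothetical stand-ins; real road-sign attacks target deep networks with physical stickers, but the principle that a small, targeted nudge to every input can flip the label is the same.

```python
# Toy two-class linear classifier: score > 0 means "stop sign",
# otherwise "speed limit". Weights, bias, and inputs are hypothetical.
WEIGHTS = [0.8, -0.5, 0.3]
BIAS = -0.1


def score(pixels):
    """Linear decision score w.x + b."""
    return sum(w * x for w, x in zip(WEIGHTS, pixels)) + BIAS


def classify(pixels):
    return "stop sign" if score(pixels) > 0 else "speed limit"


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_perturb(pixels, epsilon):
    """Move each pixel by at most epsilon against the score's gradient.

    For a linear model the gradient of the score with respect to the
    input is just WEIGHTS, so stepping along -sign(w) lowers the score
    as fast as possible under a per-pixel budget of epsilon.
    """
    return [x - epsilon * sign(w) for x, w in zip(pixels, WEIGHTS)]


clean = [0.6, 0.5, 0.4]
adversarial = fgsm_perturb(clean, epsilon=0.2)
print(classify(clean), round(score(clean), 2))
print(classify(adversarial), round(score(adversarial), 2))
```

No pixel moves by more than 0.2, yet the score drops by epsilon times the sum of the absolute weights, enough to cross the decision boundary. In a real image with many thousands of pixels, that drop accumulates across every pixel, which is why the individual changes can stay subtle.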

Slight alterations to an image can therefore produce widely different interpretations from image recognition systems. In the demonstration, subtle changes to a ‘stop sign’ made with spray paint and a few stickers led the image recognition system to misclassify it as a ‘speed limit sign.’ To prevent this, the classifiers used in autonomous cars must be highly sophisticated and robust. The best defense is to leverage multi-modal systems for image recognition: instead of relying on a single sensor, whether LiDAR, radar, or cameras, it is wiser to combine them so that a sensor that has been fooled can be cross-checked against the others.
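
A minimal sketch of that cross-checking idea, assuming each sensor pipeline already outputs its own label for the same object. The sensor names, labels, and simple majority-vote rule are illustrative assumptions; production sensor fusion is far more involved, and in practice not every sensor can classify every object on its own.

```python
from collections import Counter


def fuse_labels(readings, quorum=2):
    """Return the label at least `quorum` sensors agree on, else None.

    `readings` maps a hypothetical sensor name to the label its own
    pipeline produced. Returning None signals disagreement, which a
    planner could handle conservatively instead of trusting one sensor.
    """
    if not readings:
        return None
    label, votes = Counter(readings.values()).most_common(1)[0]
    return label if votes >= quorum else None


# Hypothetical readings: the camera has been fooled by stickers,
# while the other two pipelines have not.
readings = {"camera": "speed limit", "lidar": "stop sign", "radar": "stop sign"}
print(fuse_labels(readings))
```

The design choice here is that a single fooled sensor is outvoted, and total disagreement yields no answer rather than a guess.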

2) Object Detection

Like image recognition algorithms, object detection algorithms can be outwitted by adversarial attacks. Researchers from the University of Central Florida conducted an intriguing experiment that used a camouflage pattern to hide vehicles from the detection system.

Such adversarial threats are especially dangerous for road users because they are highly valuable and impactful from the perspective of malicious actors. Moreover, unlike the stop sign attack, this one is legal: in the United States, painting a car is allowed, whereas altering a stop sign is illegal. This poses a significant threat to self-driving cars, because anyone could legally craft inputs that fool public machine learning-based systems.
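
Object detectors typically score each candidate box and discard those below a confidence threshold, so an adversarial camouflage only needs to push the vehicle’s score under that cutoff to make it “disappear.” A minimal sketch, with hypothetical detections, made-up scores, and a 0.5 threshold chosen purely for illustration:

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    score: float  # detector confidence in [0, 1]


def keep_confident(detections, threshold=0.5):
    """Drop detections whose confidence falls below the threshold."""
    return [d for d in detections if d.score >= threshold]


# Hypothetical detector outputs for the same scene, before and after
# the car is repainted with an adversarial camouflage pattern.
plain_car = [Detection("car", 0.92), Detection("pedestrian", 0.81)]
camouflaged_car = [Detection("car", 0.31), Detection("pedestrian", 0.81)]

print([d.label for d in keep_confident(plain_car)])
print([d.label for d in keep_confident(camouflaged_car)])
```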

3) Semantic Segmentation

The purpose of the semantic segmentation system in autonomous cars is to classify each pixel in an image into a pre-determined class. This enables driverless vehicles to recognize lanes, traffic lights, street signs, highways, and other vital information.
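
At the output end, a segmentation network produces one score per class for every pixel, and the predicted label map is the per-pixel argmax. The sketch below assumes a tiny 2x3 “image”, three hypothetical classes, and hand-written scores in place of a real network’s output.

```python
CLASSES = ["road", "lane_marking", "vehicle"]  # hypothetical class set

# scores[row][col] holds one score per class for that pixel, as a
# segmentation network's final layer would produce (values made up).
scores = [
    [[2.1, 0.3, 0.1], [0.4, 1.8, 0.2], [1.9, 0.5, 0.3]],
    [[0.2, 0.1, 2.5], [1.7, 0.6, 0.4], [0.3, 2.2, 0.1]],
]


def segment(score_map):
    """Assign each pixel the class with the highest score (argmax)."""
    return [
        [CLASSES[max(range(len(px)), key=px.__getitem__)] for px in row]
        for row in score_map
    ]


for row in segment(scores):
    print(row)
```

Because each pixel’s label is decided by its own scores at this final step, small patches that raise one class’s score, such as lane_marking on a few road pixels, can change the label map locally; that is the kind of lever a fake-lane attack can exploit.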

However, these AI-based systems are also at risk of adversarial attacks. Researchers from Tencent Keen Security Lab demonstrated how autonomous cars could be deceived by placing several stickers on the road that create a ‘fake lane.’

During the demonstration, the car treated the stickers as a lane and steered into it; on a real road, this could have caused a fatal accident.

4) Voice Recognition

Alas, image perception is not the only attack vector against self-driving vehicles. Voice and speech recognition systems are vulnerable to adversarial voice commands, which may cause undesired behaviors or even accidents in driverless cars.

Security researchers from Zhejiang University in China developed DolphinAttack, which sends malicious voice commands that are inaudible to humans to voice recognition systems. They demonstrated how an advertising message containing an adversarial voice command, played through the in-vehicle radio, could create chaos on the roads.
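
DolphinAttack, as its authors describe it, amplitude-modulates a voice command onto an ultrasonic carrier above roughly 20 kHz, so humans cannot hear it; nonlinearity in the microphone hardware then demodulates the command back into the audible band that the speech recognizer listens to. The sketch below illustrates that principle in standard-library Python under loud assumptions: a pure 1 kHz tone stands in for a spoken command, the carrier is 25 kHz, and the microphone is modeled by a hypothetical quadratic response x + 0.1*x**2.

```python
import math

FS = 192_000         # sample rate in Hz, high enough to represent ultrasound
CARRIER_HZ = 25_000  # ultrasonic carrier, above the ~20 kHz limit of hearing
VOICE_HZ = 1_000     # pure tone standing in for a spoken command (assumption)
DEPTH = 0.5          # modulation depth m
N = 1_920            # 10 ms of samples: whole periods for every tone used


def am_signal(n):
    """(1 + m*v(t)) * carrier(t): the command rides on the carrier."""
    t = n / FS
    voice = math.cos(2 * math.pi * VOICE_HZ * t)
    return (1 + DEPTH * voice) * math.cos(2 * math.pi * CARRIER_HZ * t)


def microphone(x, a2=0.1):
    """Hypothetical nonlinear mic response; the x**2 term demodulates AM."""
    return x + a2 * x * x


def tone_amplitude(samples, freq):
    """Amplitude of one frequency component via a single-bin DFT."""
    re = sum(s * math.cos(2 * math.pi * freq * n / FS) for n, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * freq * n / FS) for n, s in enumerate(samples))
    return 2 * math.hypot(re, im) / len(samples)


transmitted = [am_signal(n) for n in range(N)]  # what travels through the air
recorded = [microphone(x) for x in transmitted]  # what the mic passes on

print(f"1 kHz in the air:    {tone_amplitude(transmitted, VOICE_HZ):.4f}")
print(f"1 kHz after the mic: {tone_amplitude(recorded, VOICE_HZ):.4f}")
```

The transmitted waveform carries no energy at 1 kHz, only at 24, 25, and 26 kHz, yet the squared term in the assumed microphone model recreates a 1 kHz component (amplitude 0.1 times the modulation depth). That gap between what a bystander hears and what the recognizer receives is what makes the command inaudible.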

5) LiDAR

Light Detection and Ranging (LiDAR) systems in autonomous vehicles measure the distance to surrounding objects, typically by emitting laser pulses and timing how long their reflections take to return. Unfortunately, these systems are not foolproof either. Researchers from the University of Michigan demonstrated how to compromise a LiDAR-based perception system: by strategically spoofing the LiDAR sensor signals, they were able to deceive the vehicle’s LiDAR system into “seeing” a nonexistent obstacle. This could trick the vehicle into braking abruptly, causing accidents or traffic jams.
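
In the common pulsed design, ranging is a time-of-flight calculation: distance = c × Δt / 2, where Δt is the laser pulse’s round-trip time. This minimal sketch shows why spoofing works under one simplifying assumption: the sensor trusts any return pulse that arrives in its listening window, so an attacker who fires a pulse back after a chosen delay plants a point at a chosen distance. The function names are hypothetical, and a real attack must also synchronize with the sensor’s own pulses.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def distance_from_round_trip(seconds):
    """Time-of-flight ranging: the pulse travels out and back, so halve it."""
    return SPEED_OF_LIGHT * seconds / 2


def spoof_delay_for(fake_distance_m):
    """Delay after the sensor fires at which a forged return pulse would be
    read as an object fake_distance_m away, assuming the sensor accepts any
    pulse arriving in its listening window (simplifying assumption)."""
    return 2 * fake_distance_m / SPEED_OF_LIGHT


delay = spoof_delay_for(5.0)  # plant a phantom obstacle 5 m ahead
print(f"inject return after {delay * 1e9:.2f} ns")
print(f"sensor reports {distance_from_round_trip(delay):.2f} m")
```

The timing budget is tiny (tens of nanoseconds for a nearby phantom), but the arithmetic shows that the sensor has no way to tell a forged return from a genuine reflection by timing alone.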

Securing The Road Ahead

As automakers push rapidly toward connected vehicles, their efforts could come to naught without a holistic approach to cybersecurity, one that makes the new mobility ecosystem secure, vigilant, and resilient.

By applying hard-earned lessons from other industries that have already grappled with securing digital infrastructure, the automotive industry can stay ahead of cyberthreats.